As I touched on in this post, I recently had to install Ubuntu from scratch after an attempted 18.04 -> 19.04 upgrade left me with an irrecoverably bricked system.

We’re talking badly bricked: a GRUB failure that even the live USB couldn’t repair, a broken package manager, etc.

After half a day spent down the usual rabbit hole of AskUbuntu threads and the like, I decided that a clean install was the way forward.

Over the course of ten years of daily Ubuntu use, I have had to reinstall the system several times.

As a general rule, each time is more painful than the last. This is because:

- Doing the same thing over and over again gets increasingly annoying

- Life gets busier, which only magnifies the annoyance of repeating the same cycle.

- As I’m self-employed, time = money. Being unable to work on deliverables because my workstation is down is simply not viable.

- The longer my system stays stable and usable, the more configuration changes accumulate. So although the gaps between fresh installs have grown longer, starting from scratch has become correspondingly harder.

Every time I install from scratch I have to:

- Reinstall every program that I added to stock Lubuntu (after Lubuntu’s decision to migrate from LXDE to LXQt I have moved to stock Ubuntu, but Lubuntu was my go-to distro for years).

- Hunt down whatever backups I have made of system configuration files that I have changed. Hard-coding keyboard shortcuts into the Openbox configuration file was a favorite of mine for many years; at least I generally remembered to back these up periodically via a few Bash scripts I wrote and scheduled as cron jobs.

- Tweak the power management settings to prevent the annoying “screen goes black after ten minutes” problem, the bane of Ubuntu movie and Netflix fans.

- Reinstall all Windows and Android virtual machines in VMware and Genymotion. This is an additional annoyance from a business perspective, because I use these almost solely for benchmarking Windows/Android applications.

- Make a bewildering variety of neurotic changes to tweak default system behaviors, such as configuring the file manager to open folders with one, rather than two, clicks.

- The list goes on and on.

I have tried to justify this to myself as a sort of “spring clean” of my system that I do every couple of years (and a good way to learn how to deploy my preferred Linux desktop environment quickly), but it’s fair to say that at this point the annoyance outweighs any benefits I can conjure in my mind.

I have considered approaches like building my own Linux distro to speed up the process a little, but the Linux desktop world moves so quickly that, even if I were to restore from that, the required upgrades and packages that are no longer maintained would probably just add complication to the process.

My Previous Backup Approach

To be clear, I have always made sure to have some sort of backup system in place.

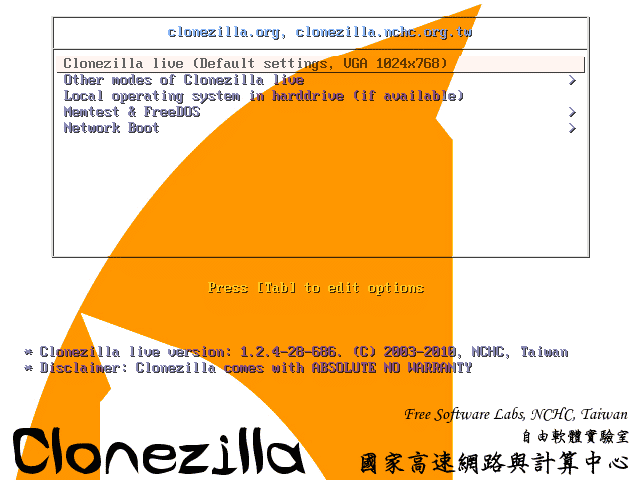

However, these often relied on me remembering to do somewhat complicated things, like taking a Clonezilla image at regular intervals from a live USB (I am still hopeful that I can figure out how to do this over the LAN at some point and automate the process). As I have learned the hard way, any backup strategy that relies on human intervention, without redundancy, is doomed to failure.

Although the images I took were viable (yes, I have done a test restore, a step so often skipped that there is a dedicated International Verify Your Backups Day), I change my system extensively and frequently. The gap between snapshots was therefore often unacceptably long, and I simply opted for a fresh install instead.

It’s often quicker than attempting to salvage a painfully corrupted system, but is certainly not an approach I would recommend.
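Because the test restore is the step most often skipped, it helps to make it cheap to check. What follows is a minimal sketch of such a check. It assumes Bash plus GNU coreutils and findutils. It compares a test-restored directory tree against the original by SHA-256 checksum. The function names `make_manifest` and `verify_restore` are hypothetical and are not part of the setup described here.

```bash
# Sketch: verify a test restore by comparing SHA-256 manifests of two trees.
# Assumes Bash (for process substitution), GNU find/sort/xargs and sha256sum.

# make_manifest DIR: print "checksum  ./relative/path" for every regular file,
# sorted by path so two trees can be compared line by line.
make_manifest() {
    local dir=$1
    (cd "$dir" && find . -type f -print0 | sort -z | xargs -0 -r sha256sum)
}

# verify_restore ORIGINAL RESTORED: succeed only if both trees contain the
# same files with identical contents; print the differing lines otherwise.
verify_restore() {
    local original=$1 restored=$2
    if diff <(make_manifest "$original") <(make_manifest "$restored"); then
        echo "OK: restore matches original"
        return 0
    else
        echo "MISMATCH: see differences above" >&2
        return 1
    fi
}
```

Suppose an image has been restored to a spare drive mounted at a hypothetical `/mnt/restore-test`. Running `verify_restore /home/daniel /mnt/restore-test/home/daniel` would then list any file that differs or is missing. Files that changed after the image was taken will, of course, show up as differences.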

Introducing: Meta Backup Strategy V2

I’m calling the approach I’m documenting here (primarily for my own benefit) “meta backup strategy V2”; it’s based loosely on this wonderfully simple guide. As a brief foray into the world of technical writing, I am also working on documenting it properly as a side project.

It’s basically everything I have learned over the years and promised myself I would do, but actually implemented, with enough built-in safeguards that it should really work!

As I’ve been developing it, I’ve come to realize that my backup strategy has traditionally sought to provide recovery from two disparate data loss scenarios of wildly differing likelihood.

These are:

- Scenario one: I attempt to upgrade Ubuntu / install a package that subsequently bricks my system. Besides LTS -> bleeding edge distro updates, other causes have historically included installing bad graphics drivers. Likelihood: reasonably probable.

- Scenario two: Armageddon breaks out. AWS and the internet as we know it have both been obliterated. To make matters worse, in the ensuing chaos, my apartment has burnt down. That external hard drive sitting in a secure offsite vault dozens of meters beneath Jerusalem remains my only hope of recovering that scan I took of my old passport. Likelihood: infinitesimally improbable.

I realized that my attempts to develop an extremely thorough backup strategy unintentionally dealt more with scenario two than scenario one.

(I’m addressing bulk data loss here rather than more specific scenarios like accidental deletions and bit rot. I mitigate the former by keeping at least one long-term historical snapshot of my key data; the latter is something I think is much more of a concern in the enterprise environment.)

Examples:

- Copying your web hosting data to two redundant storage systems, as the 3-2-1 Backup Rule calls for, is a commendable best practice. However, it is highly unlikely that a professional web hosting company or SaaS provider will both go out of business and leave customers without access to its own backups.

- It is astronomically unlikely that whatever you have backed up to AWS S3 will be inaccessible when you need to recover from it.

MBS V2 is much more concerned with providing the ability to reliably and quickly restore my beloved Linux desktop in the event of scenario one so that the system can remain alive indefinitely and I never have to interrupt a day’s work to restore the base of a viable workstation.

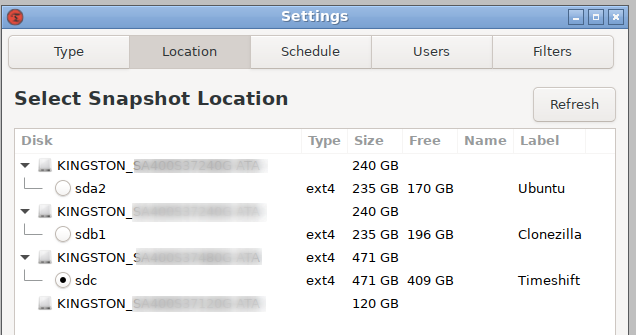

The main tools I am currently using are Timeshift (a GUI-based system restore point creator that takes incremental backups via rsync) and Clonezilla.

The complete full-disk backup process is as follows:

- I take automated daily, weekly, and monthly snapshots with Timeshift and store these on my desktop’s backup drive (1TB; the main drive is 250GB). I’ve thought about scripting a way to duplicate these over SSH to my old laptop / “server”, but reckon the slight extra redundancy is unlikely ever to be useful.

- In the event of a bricked system, I should be able to roll back to any of these snapshots via Timeshift’s CLI from within a live environment.

- Because I don’t entirely trust backups taken from within the running system itself, I also take irregular Clonezilla images and copy these to two local storage locations: the backup drive and an external hard drive.

- I also want to push a copy of one good Clonezilla image to S3, but haven’t gotten around to doing so yet. The upload would have to use a multipart-capable tool such as s3cmd, because the image is far larger than the 5 GB limit for a single S3 upload. It would likely take a couple of days to complete.

- I have also duplicated my current system (two SSDs, one containing Ubuntu and another containing Linux) onto a cheap 1TB hard drive, which I store in an anti-static bag and shock-proof case at home (never forget the possibility of earthquakes!). If something fails and I need to get up and running again immediately, I can simply attach this to the motherboard and get computing. This backup alone would have saved me untold frustration over the past few years.
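On the S3 upload point, two S3 limits matter here. A single PUT is capped at 5 GB, and a multipart upload may have at most 10,000 parts. That means a very large image also needs a large enough chunk size. s3cmd’s `--multipart-chunk-size-mb` defaults to 15 MB, which tops out at roughly 146 GiB per upload. The sketch below computes a chunk size that fits. The function names are hypothetical. It also treats s3cmd’s “MB” as MiB, which is an assumption worth checking against your s3cmd version.

```bash
# Sketch: choose an s3cmd --multipart-chunk-size-mb value so that a large
# file fits within S3's limit of 10,000 parts per multipart upload.
# Assumes s3cmd's default 15 MB chunk size and treats its "MB" as MiB.

S3_MAX_PARTS=10000
S3CMD_DEFAULT_CHUNK_MB=15
MIB=$((1024 * 1024))

# chunk_size_mb BYTES: smallest chunk size in MB (never below the s3cmd
# default) that keeps the upload at or under S3_MAX_PARTS parts.
chunk_size_mb() {
    local bytes=$1
    # Ceiling division: MB per part if the file were split into the maximum parts
    local needed=$(( (bytes + S3_MAX_PARTS * MIB - 1) / (S3_MAX_PARTS * MIB) ))
    if (( needed < S3CMD_DEFAULT_CHUNK_MB )); then
        needed=$S3CMD_DEFAULT_CHUNK_MB
    fi
    echo "$needed"
}

# parts_for BYTES CHUNK_MB: number of parts the upload would be split into.
parts_for() {
    local bytes=$1 chunk_mb=$2
    echo $(( (bytes + chunk_mb * MIB - 1) / (chunk_mb * MIB) ))
}
```

Take a 200 GiB archive (214,748,364,800 bytes) as an example. The default 15 MB chunk would need 13,654 parts, while `chunk_size_mb` returns 21, giving 9,753 parts. The result could be passed as `s3cmd put --multipart-chunk-size-mb="$(chunk_size_mb "$(stat -c %s image.tar)")" image.tar s3://example-backup-bucket/`. The file and bucket names in that command are hypothetical.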

I also used to run a simple Bash script via a cron job several times a day that would copy important configuration files into a backup folder in my cloud storage. The idea was that it would provide files to copy into a temporary system as a softer and quicker means of disaster recovery than swapping in new hard drives. The last version of this was:

```bash
#!/bin/bash
# Copy key configuration files into a cloud-synced backup folder.
# Each cp runs in the background (&) so the copies proceed in parallel.

cp -r /home/daniel/.config/libreoffice/4/user ~/pCloudDrive/Technical/Backups/Automatic/AlmaDesktop &
cp -a /home/daniel/Scripts ~/pCloudDrive/Technical/Backups/Automatic/Alma63Desktop &
cp /home/daniel/.config/openbox/lubuntu-rc.xml ~/pCloudDrive/Technical/Backups/Automatic/AlmaDesktop &
cp -r /home/daniel/.config/autokey ~/pCloudDrive/Technical/Backups/Automatic/AlmaDesktop &
# cp -r /home/daniel/Desktop/* ~/pCloudDrive/Technical/Backups/Automatic/AlmaDesktop &

# Wait for all background copies to finish before exiting
wait
exit
```

Captured in the above should be:

- My LibreOffice dictionary and templates

- My Lubuntu Openbox configuration (now lxde-rc.xml in the same directory)

- My Autokey-GTK user folder, which also backs up my phrases and keybindings.

- Desktop launch icons (this really required more work, as it would also obviously often inadvertently scoop up whatever else I had lying around my desktop at the time the script ran!)
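The Desktop problem in particular could be narrowed by copying only `.desktop` launcher files. The sketch below is a variant of the config backup script, written as a Bash function. Several things in it are assumptions, not part of the original:

- GNU cp’s `--parents` flag, which keeps each file’s path relative to the home folder.
- One timestamped folder per run, instead of overwriting the previous copy.
- The hypothetical function name `backup_configs`.

```bash
# Sketch: a variant of the config backup script, written as a Bash function.
# Assumes GNU cp (for --parents). The path list mirrors the original script.

# backup_configs SRC_HOME DEST_ROOT
#   Copies selected config paths from SRC_HOME into DEST_ROOT/<timestamp>/,
#   keeping each path relative to SRC_HOME, and prints the new folder.
backup_configs() {
    local src_home=$1 dest_root=$2
    local dest
    dest="$dest_root/$(date +%Y-%m-%d_%H%M%S)"
    mkdir -p "$dest" || return 1
    dest=$(cd "$dest" && pwd) || return 1   # absolute path, since we cd below
    # Paths relative to the home folder (taken from the original script)
    local paths=(
        .config/libreoffice/4/user
        Scripts
        .config/openbox/lubuntu-rc.xml
        .config/autokey
    )
    (
        cd "$src_home" || exit 1
        for p in "${paths[@]}"; do
            # Skip paths that don't exist rather than failing the whole run
            if [ -e "$p" ]; then
                cp -a --parents "$p" "$dest"
            fi
        done
        # Only launcher files, so stray documents on the Desktop are not swept up
        shopt -s nullglob
        for launcher in Desktop/*.desktop; do
            cp -a --parents "$launcher" "$dest"
        done
    ) || return 1
    echo "$dest"
}
```

A crontab entry such as `0 */4 * * * /home/daniel/Scripts/run-config-backup.sh` would run it every four hours. Here `run-config-backup.sh` is a hypothetical wrapper that calls `backup_configs /home/daniel ~/pCloudDrive/Technical/Backups/Automatic/AlmaDesktop`. Timestamped folders accumulate, so they would need occasional pruning.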

I believe that this should be enough to ensure that I never, ever have to reinstall Ubuntu again.

(In the case of a mechanical disk failure, which I have yet to experience, I would simply install a new SSD. If I had advance warning, for instance from bad blocks showing up, I could use dd to duplicate the drive quickly. If not, I could try to recover the Timeshift snapshots from a live USB (I think!), or else restore from the latest Clonezilla image.)
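For the advance-warning case, the dd step might look like the following sketch. The function name `clone_drive` and the device names in the example are hypothetical. The mounted-target check and the block-size choice are assumptions, not part of the original plan.

```bash
# Sketch: block-copy a whole drive (or an image file) with dd.
# Device names are hypothetical; dd overwrites the target without asking.

# clone_drive SOURCE TARGET
clone_drive() {
    local src=$1 dst=$2
    if [ "$src" = "$dst" ]; then
        echo "refusing: source and target are the same" >&2
        return 1
    fi
    # Refuse if the target (or any of its partitions) appears in /proc/mounts
    if grep -qs "^$dst" /proc/mounts; then
        echo "refusing: $dst is mounted" >&2
        return 1
    fi
    # bs=4M speeds up the copy; conv=fsync flushes to disk before dd exits
    dd if="$src" of="$dst" bs=4M conv=fsync status=progress
}
```

For example, `clone_drive /dev/sda /dev/sdb` would copy the whole (hypothetical) failing `/dev/sda` onto `/dev/sdb`. It is safest to run this as root from a live USB, so the source isn’t being written to mid-copy, and to confirm device names with `lsblk` first. If the source is already returning read errors, adding `noerror,sync` to the `conv=` list makes dd carry on past unreadable blocks, zero-filling them.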

‘Scenario Two’ Recovery Planning

As I mentioned, I’ve come to realize over time that all my historical problems with backups, and with having to reinstall Ubuntu, have come from the system failing. Having thorough backups of everything you own in the cloud and on SaaS platforms is responsible and a ‘nice to have’. However, it is highly unlikely ever to be needed by individual users with small amounts of data in the cloud.

However, for the sake of thoroughness, I still include it in the overall backup approach.

I currently:

- Periodically push automated snapshots of my main cloud storage system to S3. (1 x offsite redundancy in the cloud)

- Periodically compress and store snapshots of my main cloud storage system to my external SSD (1 x onsite redundancy)

I follow roughly the same procedure for:

- My web hosting: which goes to S3 and my external hard drive.

- All “mission critical” SaaS platforms I use, including bookkeeping platforms, a to-do list manager and, of course, G Suite (via Google Takeout). These now just go to S3.
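The “compress and store snapshots” step for the main cloud storage folder might be sketched as follows. The sketch assumes two things. First, the cloud folder is mounted or synced locally, like the `~/pCloudDrive` folder in the config script earlier. Second, GNU tar and gzip are available. The function name and example paths are hypothetical.

```bash
# Sketch: write a dated, gzip-compressed tarball of a folder to another drive.
# Assumes GNU tar and gzip.

# snapshot_folder SRC DEST_DIR
#   Archives SRC into DEST_DIR/<name>-<YYYY-MM-DD>.tar.gz and prints the path.
snapshot_folder() {
    local src=$1 dest_dir=$2
    local name archive
    name=$(basename "$src")
    archive="$dest_dir/$name-$(date +%Y-%m-%d).tar.gz"
    mkdir -p "$dest_dir" || return 1
    # -C stores paths relative to SRC's parent instead of absolute paths
    tar -czf "$archive" -C "$(dirname "$src")" "$name" || return 1
    # Read the archive back to catch a truncated or corrupt write early
    gzip -t "$archive" || return 1
    echo "$archive"
}
```

A call like `snapshot_folder ~/pCloudDrive /media/daniel/ExternalSSD/cloud-snapshots` would produce one archive per day. The mount point in that call is hypothetical. Archiving a mounted cloud drive may force every file to be downloaded first, so the first run could be slow.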

Work Left To Do

- In order for my cloud backup to comply with the 3-2-1 Backup Rule, I still need to find an efficient way to periodically download all my S3 buckets and store snapshots somewhere on-site.

- I need to push at least one good Clonezilla full-disk image (ideally verified) to S3.

- I really want to deploy a more professional on-site server at some point (due to space constraints, this is currently an old laptop with a measly 500GB capacity).

- It should be RAID-based and large enough that I can start taking historical (as well as ‘latest’) snapshots of everything that is mirrored on-site, including:

  - Full-disk Clonezilla images of my desktop

  - Periodic snapshots of my entire S3 infrastructure. Because all the cloud stuff winds up there, this would be enough to get me ‘3-2-1-compliant’ on that front.

- Such a server should have large capacity (I’m shooting for ≥4 TB). To be extra safe, I should run a server of similar capacity at another offsite location I have access to, so that even this medium has instant redundancy.

- If I ever manage to achieve all the above, I will feel truly confident in my backup / disaster recovery strategy.
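Keeping historical snapshots on a fixed-capacity server implies some kind of retention policy. A minimal sketch of one follows. It assumes each snapshot is a folder named by ISO date, so sorted order equals chronological order. The function name and naming scheme are hypothetical.

```bash
# Sketch: keep only the newest KEEP snapshot folders in a directory.
# Assumes folders are named by ISO date (e.g. 2019-05-01), so sorted order
# is chronological order.

# prune_snapshots DIR KEEP
prune_snapshots() {
    local dir=$1 keep=$2
    # Guard against deleting everything by mistake (non-numeric or < 1)
    (( keep >= 1 )) || return 1
    local old_nullglob snaps i excess
    old_nullglob=$(shopt -p nullglob || true)
    shopt -s nullglob
    snaps=("$dir"/*/)          # directories only, expanded in sorted order
    eval "$old_nullglob"       # restore the caller's nullglob setting
    excess=$(( ${#snaps[@]} - keep ))
    for (( i = 0; i < excess; i++ )); do
        rm -rf -- "${snaps[i]}"
    done
}
```

For example, `prune_snapshots /srv/backups/desktop 12` would keep the twelve newest snapshots; the path is hypothetical. The sketch orders snapshots by name rather than by modification time, because copying or restoring folders can reset timestamps.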